Substrate Study Notes

The Substrate documentation changes rapidly, so links in these notes may be broken; see https://docs.substrate.io/ for the current version.

Ways to use Substrate

Use the Substrate node: In this case, you just need to supply a JSON file and launch your own blockchain. The JSON file allows you to configure the genesis state of the modules that compose the Substrate Node’s runtime, such as: Balances, Staking, and Sudo.

Use Substrate FRAME, which offers many pallets to choose from freely: This affords you a large amount of freedom over your blockchain’s logic, and allows you to configure data types, select from a library of modules (called “pallets”), and even add your own custom pallets.

Use Substrate Core without FRAME and implement the runtime in Wasm: The runtime can be designed and implemented from scratch. This could be done in any language that can target WebAssembly.

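Substrate components

Storage: the underlying storage database, used to persist the evolving state of the blockchain.

Runtime: the business logic of the blockchain; its results must be agreed on through on-chain consensus.

Peer-to-peer network: P2P communication between nodes.

Consensus: reaches agreement with the other nodes.

RPC: Substrate provides both HTTP and WebSocket RPC interfaces.

Telemetry: monitoring.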

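Setting up a private PoA network

Goal: create your own blockchain network. This includes generating your own account keys, importing the keys into the keystore, and creating a custom genesis configuration file (chain spec) that uses those key pairs.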

【Substrate 入门】创建我们自己的区块链网络 - 海阳之新的文章 - 知乎

https://zhuanlan.zhihu.com/p/340583890

PoA = Aura

The Substrate node template uses a proof of authority consensus model also referred to as authority round or Aura consensus. The Aura consensus protocol limits block production to a rotating list of authorized accounts—authorities—that create blocks in a round robin fashion.
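The round-robin rule can be illustrated with a small sketch. It assumes that the author of a slot is picked by taking the slot number modulo the number of authorities; the function name `slot_author` and the string account names are hypothetical and stand in for the real Aura types.

```rust
/// Hypothetical model of Aura-style round-robin authoring.
/// `authorities` is the ordered list of authorized accounts and `slot`
/// is the current slot number. Returns `None` when the list is empty.
fn slot_author<'a>(slot: u64, authorities: &[&'a str]) -> Option<&'a str> {
    if authorities.is_empty() {
        return None;
    }
    // Each authority gets every n-th slot, where n is the list length.
    let index = (slot % authorities.len() as u64) as usize;
    Some(authorities[index])
}
```

With three authorities, slots 0, 1, 2 go to the first, second, and third authority, and slot 3 goes back to the first one.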

Design philosophy

Substrate 入门 - Substrate 的模型设计 -(七) - 金晓的文章 - 知乎

https://zhuanlan.zhihu.com/p/101058405

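Comparing the program model of a modern computer with the current blockchain model

- A program consists of instructions and data. On a chain these correspond to on-chain code (for example Ethereum contracts, Fabric chaincode, or the Substrate Runtime) and on-chain storage (Ethereum and Fabric both call it the world state; Substrate calls it Runtime Storage, which is also a world state).

- A program accepts user input, processes it, and produces output. On a chain this corresponds to accepting the transactions in a block and modifying state by executing them. Users can query the result asynchronously, and that query result represents the output. Note that because a block has to go through consensus, the result can only be relied on once consensus on the block has been reached (that is, once the block is final). A blockchain is therefore an asynchronous system. Accepting a transaction call here means accepting an Extrinsic, an input from outside.

- A program runs inside the operating-system environment of a computer (programs that need no operating system are not considered here). On a chain this corresponds to the sandbox needed to run the on-chain code, a sandbox stripped of IO, network access, and anything else that has side effects. In Ethereum this environment is the EVM, in Fabric it is Docker, and in Substrate it is the Runtime's execution environment (for example the Wasm sandbox).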

Separating the Runtime from everything else

Substrate 设计总览 - 金晓的文章 - 知乎

https://zhuanlan.zhihu.com/p/56383616

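Everything other than the Runtime provides the basic system functionality that every chain should have; developers customize a chain's logic by modifying the Runtime.

Any functionality whose execution results must be agreed on by consensus belongs in the Runtime.

There is a simple criterion for deciding whether a piece of functionality belongs in the Runtime:

For a given piece of functionality: if changing the code on a single node still yields the same results for all logic as on the nodes that were not changed, the functionality belongs outside the Runtime; if the results differ, it belongs inside the Runtime.

Two examples. Suppose I change the ordering code of the transaction pool so that transactions favoring a certain account are packed first. The change makes blocks produced by my own node pack transactions unfairly, but as long as everyone accepts the resulting blocks, the "state changes" that all nodes agree on remain consistent. This component clearly should not be part of the Runtime, because it does not alter how the state changes are verified when a block is validated. Now suppose I change the transfer code so that it can credit an account with money out of thin air. The modified node would then compute results that differ from those of the other nodes, and consensus would fail. Transfers therefore belong in the Runtime, so that every node executes the same logic.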

Forkless upgrades

Substrate 设计总览 - 金晓的文章 - 知乎

https://zhuanlan.zhihu.com/p/56383616

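Under the Substrate framework, the Runtime is compiled from a single code base into two executables:

- A native Rust build, usually called the native code. It is no different from the rest of the node's code: it is binary data inside the executable and runs directly. The related Substrate code is named native.

- An on-chain Wasm build, usually called the wasm code. It is deployed on chain, where anyone can fetch it, and is executed by constructing a Wasm runtime environment. The related Substrate code is named wasm.

Because both builds are compiled from the same source, their execution logic is identical (with a few small pitfalls to watch out for). The Wasm build is deployed on chain and is available to everyone, so even a node that is not running the latest version can always obtain the latest Wasm code once it has synced the blocks.

In other words, code written inside the Runtime, the code that defines what the chain can do, exists in two copies: native and Wasm. The Wasm code is deployed on chain as "on-chain data", so everyone can fetch and execute the same copy by syncing blocks. This guarantees that all miners on the chain execute the latest code.

P.S. It is worth stressing that code can be deployed through a democratic proposal, through sudo privileges, through a deployment condition defined by the developers, and so on. Which of these is "more blockchain" or "more reasonable" is outside the scope of this article and depends on the design goals of the chain. Substrate merely provides this powerful "hot update" mechanism; how to use it is up to the chain.

All of the following strategies share one rule: whenever the Wasm version is newer than the native version, the Wasm build is executed.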

- NativeWhenPossible: Execute with native build (if available, WebAssembly otherwise).

- AlwaysWasm: Only execute with the WebAssembly build.

- Both: Execute with both native (where available) and WebAssembly builds.

- NativeElseWasm: Execute with the native build if possible; if it fails, then execute with WebAssembly.

Different parts of the process can use different execution strategies:

The default execution strategies for the different parts of the blockchain execution process are:

- Syncing: NativeElseWasm

- Block Import (for non-validator): NativeElseWasm

- Block Import (for validator): AlwaysWasm

- Block Construction: AlwaysWasm

- Off-Chain Worker: NativeWhenPossible

- Other: NativeWhenPossible

Summary:

- Native runs faster than Wasm.

- Every node agrees on the Wasm build, so Wasm is the better choice for block production.

Core concepts

What is a DApp: a DApp is an application that runs autonomously, typically through smart contracts, on a decentralized-computing blockchain system.

What is a pallet: a pallet is a single piece of logic that defines something your blockchain can do (for example babe, grandpa, evm, and contracts are all pallets that Substrate ships prebuilt).

FRAME: a set of modules (pallets) and support libraries that simplify runtime development.

Runtime: the application logic. It defines what transactions are valid and invalid and determines how the chain’s state changes in response to transactions. The "outer node", everything other than the runtime, does not compile to Wasm, only to native. The outer node is responsible for handling peer discovery, transaction pooling, block and transaction gossiping, consensus, and answering RPC calls from the outside world.

Aura

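Authority round: the authorities in a fixed list take turns publishing blocks. The protocol assumes that the majority of the authorities that are online are honest.

In Substrate a transaction is no longer called a Transaction but an Extrinsic, which translates as "external; outward; of outside origin". The name signals that an Extrinsic is, from the blockchain's point of view, an input from outside. This definition leaves behind the narrow notion of a "transaction" (which reads more like a transfer). Seen from the chain's state, transactions and similar concepts are external inputs that change the state, which covers any external operation and not only transfers.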

Substrate 入门 - 交易体 -(六) - 金晓的文章 - 知乎

https://zhuanlan.zhihu.com/p/100770550

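Author: 金晓

Link: https://zhuanlan.zhihu.com/p/100770550

Source: Zhihu

Copyright belongs to the author. Contact the author for authorization before commercial reproduction; cite the source for non-commercial reproduction.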

An extrinsic is a piece of information that comes from outside the chain and is included in a block.

A block in Substrate is composed of a header and an array of extrinsics.

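Three types:

- Inherents: pieces of information that are not signed and only inserted into a block by the block author. They need no signature; they are accepted as correct because enough validators agree on them. Inherents are what truly sets Substrate apart from other blockchains and what lets it serve as a "blockchain as world computer".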

- Signed transactions: contain a signature of the account that issued the transaction and stands to pay a fee to have the transaction included on chain.

- Unsigned transactions: Since the transaction is not signed, there is nobody to pay a fee.

Block produced by our node

- Our node listens for transactions on the network.

- Each transaction is **verified** and valid transactions are placed in the transaction pool.

- The pool is responsible for **ordering** the transactions and returning ones that are ready to be included in the block. Transactions in the ready queue are used to construct a block.

- Transactions are executed and state changes are stored in local memory. Transactions from the ready queue are also propagated (gossiped) to peers over the network. We use the exact ordering as the pending block since transactions in the front of the queue have a higher priority and are more likely to be successfully executed in the next block.

- The constructed block is published to the network. All other nodes on the network receive and execute the block.
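The verify, order, and construct steps above can be sketched with a priority queue. This is a simplified model, not the actual transaction pool: `PendingTx`, `build_block`, the `is_valid` callback, and the `max_txs` limit are hypothetical names introduced for illustration.

```rust
use std::collections::BinaryHeap;

/// Hypothetical pending transaction. The derived ordering compares
/// `priority` first, so the heap yields the highest priority first.
#[derive(Debug, PartialEq, Eq, PartialOrd, Ord)]
struct PendingTx {
    priority: u64,
    id: u32,
}

/// Verifies incoming transactions, orders the valid ones by priority,
/// and returns the ids of up to `max_txs` transactions for the next block.
fn build_block<F>(incoming: Vec<PendingTx>, is_valid: F, max_txs: usize) -> Vec<u32>
where
    F: Fn(&PendingTx) -> bool,
{
    // Step 1 and 2: drop invalid transactions, keep the rest in a ready queue.
    let mut ready: BinaryHeap<PendingTx> =
        incoming.into_iter().filter(|tx| is_valid(tx)).collect();

    // Step 3: take transactions from the front of the queue.
    let mut block = Vec::new();
    while block.len() < max_txs {
        match ready.pop() {
            Some(tx) => block.push(tx.id),
            None => break,
        }
    }
    block
}
```

A real pool also tracks dependencies between transactions (for example account nonces); the sketch leaves that out to keep the ordering idea visible.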

Block received from network

The block is executed and the entire block either succeeds or fails.

Weights are the mechanism used to manage the time it takes to validate a block.

Executive module: Unlike the other modules within FRAME, this is not a runtime module. Rather it is a normal Rust module that calls into the various runtime modules included in your blockchain.

Compared to oracle

Off-chain features run in their own Wasm execution environment outside of the Substrate runtime. This separation of concerns makes sure that block production is not impacted by long-running off-chain tasks.

However, as the off-chain features are declared in the same code as the runtime, they can easily access on-chain state for their computations.

Finality: The part of consensus that makes the ongoing progress of the blockchain irreversible. After a block is finalized, all of the state changes it encapsulates are irreversible without a hard fork. The consensus algorithm must guarantee that finalized blocks never need reverting. However, different consensus algorithms can define different finalization methods.

- deterministic finality, each block is guaranteed to be the canonical block for that chain when the block is included. Deterministic finality is desirable in situations where the full chain is not available, such as in the case of light clients. GRANDPA is the deterministic finality protocol that is used by the Polkadot Network.

- probabilistic finality, finality is expressed in terms of a probability, denoted by p, that a proposed block, denoted by B, will remain in the canonical chain. As more blocks are produced on top of B, p approaches 1.

- instant finality, finality is guaranteed immediately upon block production. This type of non-probabilistic consensus tends to use practical byzantine fault tolerance (pBFT) and have expensive communication requirements.

Runtime development

Framework for Runtime Aggregation of Modularized Entities (FRAME) is a set of modules (pallets) and support libraries that simplify runtime development.

Structure of a pallet:

- Imports and Dependencies

- Declaration of the Pallet type

- Runtime Configuration Trait

- Runtime Storage

- Runtime Events

- Hooks

- Extrinsics

Macro

pallet attribute macro

// 1. Imports and Dependencies

pub use pallet::*;

#[frame_support::pallet]

pub mod pallet {

use frame_support::pallet_prelude::*;

use frame_system::pallet_prelude::*;

// 2. Declaration of the Pallet type

// This is a placeholder to implement traits and methods.

// https://paritytech.github.io/substrate/master/frame_support/attr.pallet.html#pallet-struct-placeholder-palletpallet-mandatory

// To generate a `Store` trait associating all storages, use the attribute `#[pallet::generate_store($vis trait Store)]`

#[pallet::pallet]

#[pallet::generate_store(pub(super) trait Store)]

pub struct Pallet<T>(_);

// 3. Runtime Configuration Trait

// All types and constants go here.

// Use #[pallet::constant] and #[pallet::extra_constants]

// to pass in values to metadata.

#[pallet::config]

pub trait Config: frame_system::Config { ... }

// 4. Runtime Storage

// Use to declare storage items.

#[pallet::storage]

#[pallet::getter(fn something)]

pub type MyStorage<T: Config> = StorageValue<_, u32>;

// 5. Runtime Events

// Can stringify event types to metadata.

#[pallet::event]

#[pallet::generate_deposit(pub(super) fn deposit_event)]

pub enum Event<T: Config> { ... }

// 6. Hooks

// Define some logic that should be executed

// regularly in some context, for e.g. on_initialize.

// generate a default implementation

#[pallet::hooks]

impl<T: Config> Hooks<BlockNumberFor<T>> for Pallet<T> { ... }

// 7. Extrinsics

// Functions that are callable from outside the runtime.

#[pallet::call]

impl<T:Config> Pallet<T> { ... }

}

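Existing pallets

The metadata provides, for each pallet, information about its storage items, extrinsic calls, events, constants, and errors.

Metadata is not fixed for the life of a chain, so the metadata of an older block may be out of date. It changes only when the chain's runtime spec_version changes.

The RPC method for fetching it is state_getMetadata.

Storage lets you keep data in the blockchain; the data persists and can be accessed by the runtime logic.

The Storage module is Substrate's storage API; you can choose whichever storage item type best suits your needs.

Storage Map

Implemented as key-value pairs; used to manage items whose elements are accessed randomly rather than iterated over in full and in order.

Iterating over Storage Maps

Storage maps are iterable, but because maps are often used to track unbounded data, iteration must be done with care.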

The origin is used to check where a call comes from.

Three kinds:

1: root

2: signed

3: unsigned (none)
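The three kinds of origin can be modelled with a plain enum. The sketch below is a simplified stand-in for the real FRAME types: the `Origin` enum, the `BadOrigin` error, the `u64` account id, and both helper functions are assumptions made for illustration and only mirror the idea behind `ensure_signed` and `ensure_root`.

```rust
/// Hypothetical model of the three origin kinds.
#[derive(Debug, Clone, PartialEq)]
enum Origin {
    /// The system-level origin with full privileges.
    Root,
    /// A call signed by the account with this id.
    Signed(u64),
    /// An unsigned call with no associated account.
    None,
}

/// Returned when a call arrives from an origin it does not accept.
#[derive(Debug, PartialEq)]
struct BadOrigin;

/// Accepts only signed origins and returns the signing account.
fn ensure_signed(origin: Origin) -> Result<u64, BadOrigin> {
    match origin {
        Origin::Signed(who) => Ok(who),
        _ => Err(BadOrigin),
    }
}

/// Accepts only the root origin.
fn ensure_root(origin: Origin) -> Result<(), BadOrigin> {
    match origin {
        Origin::Root => Ok(()),
        _ => Err(BadOrigin),
    }
}
```

A dispatchable function typically performs one of these checks as its first statement and returns early with the error when the origin does not match.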

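When a pallet wants to notify external entities such as users, chain explorers, or dApps about changes or conditions in the runtime, it can use events.

A pallet can define what information its events carry and when those events are emitted.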

// Created with the macro

#[pallet::event]

#[pallet::metadata(u32 = "Metadata")]

pub enum Event<T: Config> {

/// Set a value.

ValueSet(u32, T::AccountId),

}

Declare the event type in the pallet's Config:

#[pallet::config]

pub trait Config: frame_system::Config {

/// The overarching event type.

type Event: From<Event<Self>> + IsType<<Self as frame_system::Config>::Event>;

}

// runtime/src/lib.rs: the event type must be exposed to the runtime

impl template::Config for Runtime {

type Event = Event;

}

// runtime/src/lib.rs

construct_runtime!(

pub enum Runtime where // a where clause is needed to support the Runtime generic types

Block = Block,

NodeBlock = opaque::Block,

UncheckedExtrinsic = UncheckedExtrinsic

{

// --snip--

TemplateModule: template::{Pallet, Call, Storage, Event<T>},

//--add-this------------------------------------->^^^^^^^^

}

);

Depositing events (using the macro)

// 1. Use the `generate_deposit` attribute when declaring the Events enum.

#[pallet::event]

#[pallet::generate_deposit(pub(super) fn deposit_event)]

// super and self are both Rust keywords: super refers to the parent scope (the scope outside the current mod), and self refers to the current scope

#[pallet::metadata(...)]

pub enum Event<T: Config> {

// --snip--

}

// 2. Use `deposit_event` inside the dispatchable function

#[pallet::call]

impl<T: Config> Pallet<T> {

#[pallet::weight(1_000)]

pub(super) fn set_value(

origin: OriginFor<T>,

value: u32,

) -> DispatchResultWithPostInfo {

let sender = ensure_signed(origin)?;

// Call deposit_event to write the event into storage

Self::deposit_event(Event::ValueSet(value, sender));

Ok(().into())

}

}

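Each block stores only the events that have occurred since the start of that block.

deposit_event can be overridden.

Supported event types

Events can carry any type that supports the Parity SCALE codec. Runtime generic types must be declared with a where clause (see construct_runtime! above).

Responding to events

Events cannot be queried from within the runtime, but the list of events that the pallet has stored can be read. Alternatively, a front-end interface can subscribe to events.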

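Errors

Rust's error handling is fairly elegant, so errors are handled mainly through the returned Result type.

Err

DispatchResult: the return type of a dispatchable call. Its Err variant carries a DispatchError, which indicates that the dispatchable function encountered an error.

// Define the pallet's own custom DispatchError variants with the macro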

#[pallet::error]

pub enum Error<T> {

/// Error names should be descriptive.

InvalidParameter,

/// Errors should have helpful documentation associated with them.

OutOfSpace,

}

The ensure! macro checks a precondition and returns the given error when the precondition is not met.
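The early-return behavior of ensure! can be shown with a plain-Rust stand-in. The macro below is a simplified re-implementation written for this note, not the one from frame_support, and `store_item` with its parameters is a hypothetical function; the error names are taken from the Error enum above.

```rust
/// Error names taken from the pallet error enum shown above.
#[derive(Debug, PartialEq)]
enum Error {
    InvalidParameter,
    OutOfSpace,
}

/// Simplified stand-in for `ensure!`: return the error unless the
/// condition holds.
macro_rules! ensure {
    ($cond:expr, $err:expr) => {
        if !$cond {
            return Err($err);
        }
    };
}

/// Hypothetical function that reserves `size` units and returns the new usage.
fn store_item(used: u32, capacity: u32, size: u32) -> Result<u32, Error> {
    // Precondition 1: the item must have a non-zero size.
    ensure!(size > 0, Error::InvalidParameter);
    // Precondition 2: the item must fit, without arithmetic overflow.
    let new_used = used.checked_add(size).ok_or(Error::OutOfSpace)?;
    ensure!(new_used <= capacity, Error::OutOfSpace);
    Ok(new_used)
}
```

Checking every precondition before touching state keeps a failed call from leaving partial changes behind.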

Example: practice Events and Errors by completing the Substrate Kitties tutorial

pallet-transaction-payment

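Transaction fee design for Substrate blockchain applications

Why fees are charged:

1: To reward those who provide the service, namely the development team and the node operators

2: To regulate resource usage

How fees are calculated

Parameters (base fee, weight fee, length fee, tip)

base fee: the minimum amount charged for any transaction

weight fee: proportional to the execution time the transaction consumes. Given the limited block production time and the limits on on-chain state, weight is used to describe the computational complexity of a transaction, that is, the computing resources it consumes and the on-chain state it occupies.

length fee: proportional to the encoded length of the transaction

tip: an optional tip that raises the transaction's priority

Implemented with the transaction-payment pallet

It provides the basic fee-charging logic

It exposes several configuration traits (for example, Config::WeightToFee converts a weight value into a deductible fee in the chain's currency type; Config::FeeMultiplierUpdate updates the fee for the next block by defining a multiplier based on the final state of the chain at the end of the previous block; Config::OnChargeTransaction manages the withdrawal, refund, and deposit of transaction fees.)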

inclusion_fee = base_fee + length_fee + [targeted_fee_adjustment * weight_fee];

final_fee = inclusion_fee + tip;
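The two formulas can be worked through in a small sketch. It assumes that all amounts are integers in the chain's smallest currency unit and that targeted_fee_adjustment is modelled as a fraction (numerator over denominator); the struct `FeeInputs` and its field names are hypothetical and not part of the pallet's API.

```rust
/// Hypothetical inputs to the fee formulas, in the smallest currency unit.
struct FeeInputs {
    base_fee: u128,
    length_fee: u128,
    weight_fee: u128,
    /// targeted_fee_adjustment as a fraction; the denominator must be non-zero.
    multiplier_num: u128,
    multiplier_den: u128,
    tip: u128,
}

/// inclusion_fee = base_fee + length_fee + targeted_fee_adjustment * weight_fee
fn inclusion_fee(f: &FeeInputs) -> u128 {
    let adjusted_weight_fee =
        f.weight_fee.saturating_mul(f.multiplier_num) / f.multiplier_den;
    f.base_fee
        .saturating_add(f.length_fee)
        .saturating_add(adjusted_weight_fee)
}

/// final_fee = inclusion_fee + tip
fn final_fee(f: &FeeInputs) -> u128 {
    inclusion_fee(f).saturating_add(f.tip)
}
```

For example, with base_fee 100, length_fee 20, weight_fee 1000, a multiplier of 3/2, and a tip of 5, the adjusted weight fee is 1500, the inclusion fee is 1620, and the final fee is 1625. Only the weight fee is scaled by the multiplier, so congestion raises the cost of computation but not the base or length components.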

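Transactions from accounts with an insufficient balance are generally not executed, because the transaction queue and the block-making logic check for this.

The FeeMultiplierUpdate parameter is configurable so that the fee charged for weight can be adjusted (if blocks are relatively saturated, the weight fee can be raised).

Transactions with special requirements

For example bonding funds or deposits

Default weight annotations

Every dispatchable function in Substrate must specify a weight. The annotation helps us decide on the weight value and also helps us optimize the code more effectively.

A weight annotation should account for the parts that noticeably affect the execution cost of the runtime method, for example: storage operations (read, write, mutate, etc.); codec (Encode/Decode) operations (serializing or deserializing Vecs or large structs); expensive computation such as search or sort; calls into other pallets.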

#[pallet::weight(100_000)]

fn my_dispatchable() {

// ...

}

// ExtrinsicBaseWeight is added automatically to the declared weight, to account for the cost of simply including an empty extrinsic in a block

#[pallet::weight(T::DbWeight::get().reads_writes(1, 2) + 20_000)]

fn my_dispatchable() {

// ...

}

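Dispatch classes

Normal (transactions that ordinary users can trigger; they may consume only a portion of a block's total weight limit, given by AvailableBlockRatio), Operational (dispatches that provide network capabilities, triggered by the network's administrators or governing council; they may consume a block's entire weight limit, are not bound by AvailableBlockRatio, have the highest priority, and do not need to pay a tip), and Mandatory (included in a block even if it exceeds the total weight limit; applies to inherents and represents functions that are part of the block validation process)

// The default DispatchClass is Normal

// The last element decides whether the user is charged according to the annotated weight; the default is Pays::Yes

#[pallet::weight((100_000, DispatchClass::Operational, Pays::No))]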

fn my_dispatchable() {

// ...

}

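Dynamic weights

Use Substrate's predefined FunctionOf struct to take the function's input into account. FunctionOf takes three arguments: a) a closure that computes the weight from the arguments; b) a fixed dispatch class, or a closure that computes the dispatch class; c) a value that sets pays_fee.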

#[pallet::weight(FunctionOf(

|args: (&Vec<User>,)| args.0.len().saturating_mul(10_000),

DispatchClass::Normal,

Pays::Yes,

))]

fn handle_users(origin, calls: Vec<User>) {

// Do something per user

}

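Post-dispatch weight correction

A dispatchable may consume less weight than was declared up front. To correct for this, it returns a different return type that carries the actual weight consumed.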

#[pallet::weight(10_000 + 500_000_000)]

fn expensive_or_cheap(input: u64) -> DispatchResultWithPostInfo {

let was_heavy = do_calculation(input);

if was_heavy {

// None means "no correction" from the weight annotation.

Ok(None.into())

} else {

// Return the actual weight consumed.

Ok(Some(10_000).into())

}

}

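Custom fees

You can implement your own weight type, but it must satisfy the following traits:

- WeighData<T>: To determine the weight of the dispatch.

- ClassifyDispatch<T>: To determine the class of the dispatch.

- PaysFee<T>: To determine whether the dispatchable’s sender pays fees.

The documentation includes a code implementation that can serve as a reference when customization is needed.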

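Because Substrate aims to produce blocks at a fixed target block time, a chain can execute only a limited number of extrinsics per block. The time needed to execute an extrinsic varies with computational complexity, storage complexity, the hardware used, and many other factors.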
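The effect of a per-block limit can be sketched as a simple packing loop. This is an illustration only: it assumes that each extrinsic's weight is a single `u64` and that an extrinsic that does not fit is skipped and left for a later block; the function `fill_block` and its parameters are hypothetical.

```rust
/// Hypothetical block packing: walks the queued extrinsic weights in order
/// and includes each one that still fits under `max_block_weight`.
/// Returns the indices of the included extrinsics and the weight used.
fn fill_block(weights: &[u64], max_block_weight: u64) -> (Vec<usize>, u64) {
    let mut included = Vec::new();
    let mut used: u64 = 0;
    for (index, &weight) in weights.iter().enumerate() {
        match used.checked_add(weight) {
            // The extrinsic fits: include it and account for its weight.
            Some(total) if total <= max_block_weight => {
                included.push(index);
                used = total;
            }
            // Too heavy for the remaining space: leave it for a later block.
            _ => continue,
        }
    }
    (included, used)
}
```

With weights 40, 70, 30, and 20 and a limit of 100, the first, third, and fourth extrinsics are included for a total weight of 90, and the second one waits for the next block.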

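Substrate does not use gas metering to measure extrinsics, because metering introduces a large amount of extra overhead. Instead it uses benchmarks to obtain an approximation of the maximum cost in the worst case. The fee is charged up front and can be partly refunded afterwards.

Denial-of-service (DoS) is a common attack vector against distributed systems, blockchain networks included. A user could repeatedly execute extrinsics that need a lot of computing power, so the system has to charge fees to keep users from abusing the network. The benchmarking pallet estimates costs as accurately as possible by executing a pallet's extrinsics many times in a simulated runtime environment and tracking the execution time.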

benchmarks! {

benchmark_name {

/* set-up initial state */

}: {

/* the code to be benchmarked */

} verify {

/* verifying final state */

}

}

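// Define the initial state, measure the execution time and the number of database reads and writes, and finally verify that the final runtime state matches what was expected.

1: Logging, with the debug and info macros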

log::info!("called by {:?}", who);

2: The Printable trait, used for printing (already implemented for u8, u32, u64, usize, &[u8], and &str; implement it yourself for custom types)

use sp_runtime::traits::Printable;

use sp_runtime::print;

#[frame_support::pallet]

pub mod pallet {

// The pallet's errors

#[pallet::error]

pub enum Error<T> {

/// Value was None

NoneValue,

/// Value reached maximum and cannot be incremented further

StorageOverflow,

}

impl<T: Config> Printable for Error<T> {

fn print(&self) {

match self {

Error::NoneValue => "Invalid Value".print(),

Error::StorageOverflow => "Value Exceeded and Overflowed".print(),

_ => "Invalid Error Case".print(),

}

}

}

}

3: Substrate's built-in print function

use sp_runtime::print;

pub fn do_something(origin: OriginFor<T>) -> DispatchResult {

print("Execute do_something");

Ok(())

}

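4: if_std

When you want more than plain printing and do not want to implement a trait, use if_std!

// Uses if_std!; the code inside runs only when the native runtime is executed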

use sp_std::if_std; // Import into scope the if_std! macro.

#[pallet::call]

impl<T: Config<I>, I: 'static> Pallet<T, I> {

// --snip--

pub fn do_something(origin) -> DispatchResult {

let who = ensure_signed(origin)?;

let my_val: u32 = 777;

Something::put(my_val);

if_std! {

// This code is only being compiled and executed when the `std` feature is enabled.

println!("Hello native world!");

println!("My value is: {:#?}", my_val);

println!("The caller account is: {:#?}", who);

}

Self::deposit_event(RawEvent::SomethingStored(my_val, who));

Ok(())

}

// --snip--

}

1: Unit tests, using cargo's built-in test support

cargo test <optional: test_name>

2: Mock runtime environment

A configuration type must be set up; it is a Rust enum.

frame_support::construct_runtime!(

pub enum Test where

Block = Block,

NodeBlock = Block,

UncheckedExtrinsic = UncheckedExtrinsic,

{

System: frame_system::{Pallet, Call, Config, Storage, Event<T>},

TemplateModule: pallet_template::{Pallet, Call, Storage, Event<T>},

}

);

impl frame_system::Config for Test {

// -- snip --

type AccountId = u64;

}

3: Mock runtime storage

Implemented with TestExternalities, which provides mock storage for tests. It is an alias for a HashMap-based externalities implementation in substrate_state_machine.

pub struct ExtBuilder;

// ExtBuilder builds a TestExternalities instance

impl ExtBuilder {

pub fn build(self) -> sp_io::TestExternalities {

let mut t = system::GenesisConfig::default().build_storage::<TestRuntime>().unwrap();

let mut ext = sp_io::TestExternalities::new(t);

ext.execute_with(|| System::set_block_number(1));

ext

}

}

Combined with a genesis configuration, this builds the mock runtime environment.

pub struct GenesisConfig<T: Config<I>, I: 'static = ()> {

pub balances: Vec<(T::AccountId, T::Balance)>,

}

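4: Block production

Simulate block production to verify that the expected behavior holds as blocks are produced.

// Increments the System pallet's block number between the on_finalize and on_initialize calls of all pallets, with System::block_number() as the only input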

fn run_to_block(n: u64) {

while System::block_number() < n {

if System::block_number() > 0 {

ExamplePallet::on_finalize(System::block_number());

System::on_finalize(System::block_number());

}

System::set_block_number(System::block_number() + 1);

System::on_initialize(System::block_number());

ExamplePallet::on_initialize(System::block_number());

}

}

#[test]

fn my_runtime_test() {

new_test_ext().execute_with(|| {

assert_ok!(ExamplePallet::start_auction());

run_to_block(10);

assert_ok!(ExamplePallet::end_auction());

});

}

5: Testing events

#[test]

fn subtract_extrinsic_works() {

new_test_ext().execute_with(|| {

assert_ok!(<test_pallet::Pallet<Test>>::subtract(Origin::signed(1), 42, 12));

let event = <frame_system::Pallet<Test>>::events().pop()

.expect("Expected at least one EventRecord to be found").event;

assert_eq!(event, mock::Event::from(test_pallet::Event::Result(42 - 12)));

});

}

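Deterministic randomness: values that look random but are in fact pseudo-random, derived cryptographically so that they cannot be predicted.

Substrate's randomness trait: the Randomness trait provides the interface between the logic that generates randomness and the logic that consumes it.

Consuming randomness: similar to random() in Python. 1: random_seed, which returns the same result when called multiple times within one block. 2: random, whose result is unique to the given context.

Generating randomness: Substrate ships two implementations of the randomness trait. 1: the Randomness Collective Flip pallet, based on collective coin flipping; it performs well but is not secure and should only be used in tests. 2: the BABE pallet, which uses verifiable random functions; it provides production-grade randomness and is used in Polkadot. Choosing this source of randomness means that your blockchain uses BABE consensus. (?)

Security properties: randomness gives Substrate runtimes a convenient and practical tool but comes with no security guarantees. Developers must make sure themselves that the randomness source in use meets the requirements of every pallet that consumes randomness.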

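A chain specification is a collection of configuration information that dictates which network a blockchain node connects to, which entities it initially communicates with, and what consensus-critical state it must have at genesis.

Structure:

ClientSpec: contains configuration information needed by the client, most of which is used to communicate with other parties in the network. (Can be changed after genesis.)

The genesis state: the consensus-critical genesis configuration. All nodes must agree on this initial state before they can agree on any subsequent block. This information must therefore be established at the very start of the chain and cannot be changed afterwards without starting an entirely new blockchain. No command-line flag can override values in the genesis portion of the chain spec.

Storage formats:

Rust code, stored in every client, which ensures that the node knows how to connect to at least one chain without any additional information

A JSON file (so a blockchain can be created simply by supplying a JSON file)

How to launch a chain:

Every time a node operator starts a node, they supply a chain specification, which may be one that is hard-coded into the binary. The chain specification can be exported to a JSON file, and that JSON file can be imported to launch a chain from it.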

substrate build-spec > myCustomSpec.json

substrate --chain=myCustomSpec.json

Upgrade

The System library defines the set_code call that is used to update the definition of the runtime.

The Forkless Upgrade a Chain tutorial describes the details of FRAME runtime upgrades and demonstrates two mechanisms for performing them.

Tutorial: Initiate a Forkless Runtime Upgrade

Runtime versioning

pub const VERSION: RuntimeVersion = RuntimeVersion {

spec_name: create_runtime_str!("node-template"),

impl_name: create_runtime_str!("node-template"),

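authoring_version: 1, // version of the block authoring interface

spec_version: 1, // version of the runtime specification

impl_version: 1, // version of the implementation of the spec

apis: RUNTIME_API_VERSIONS, // the versions of the set of runtime APIs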

transaction_version: 1,

};

Once the Wasm runtime has been upgraded, the executor checks whether the native runtime's version matches it; if the versions differ, the Wasm runtime code is executed.
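The version check can be sketched as a comparison of the three fields that these notes name as decisive: spec_name, spec_version, and authoring_version. The struct below keeps only those fields, and `Executor` and `choose_executor` are hypothetical names used for illustration; this is not the real executor code.

```rust
/// Reduced, hypothetical version record with only the compared fields.
#[derive(Debug, Clone, PartialEq)]
struct RuntimeVersion {
    spec_name: &'static str,
    spec_version: u32,
    authoring_version: u32,
}

#[derive(Debug, PartialEq)]
enum Executor {
    Native,
    Wasm,
}

/// Uses the native build only when it matches the on-chain Wasm runtime
/// in all three fields; otherwise falls back to the Wasm build.
fn choose_executor(native: &RuntimeVersion, on_chain_wasm: &RuntimeVersion) -> Executor {
    let versions_match = native.spec_name == on_chain_wasm.spec_name
        && native.spec_version == on_chain_wasm.spec_version
        && native.authoring_version == on_chain_wasm.authoring_version;
    if versions_match {
        Executor::Native
    } else {
        Executor::Wasm
    }
}
```

A node that has not been updated after an on-chain upgrade therefore keeps following the chain by running the newer Wasm code, which is what makes the upgrade forkless.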

Storage migrations

Changing the layout or properties of data that already exists in storage

Through FRAME:

FRAME storage migrations are implemented by way of the OnRuntimeUpgrade trait, which specifies a single function, on_runtime_upgrade
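The idea behind such a migration can be modelled without FRAME. The sketch below is an assumption-laden illustration: the `Storage` struct, the two balance maps, the widening from u32 to u64, and the returned item count (a stand-in for the weight a real on_runtime_upgrade reports) are all hypothetical.

```rust
use std::collections::BTreeMap;

/// Hypothetical storage with an old (v1) and a new (v2) layout.
struct Storage {
    storage_version: u16,
    balances_v1: BTreeMap<&'static str, u32>,
    balances_v2: BTreeMap<&'static str, u64>,
}

/// Moves every v1 balance into the v2 layout exactly once.
/// Returns the number of migrated items.
fn on_runtime_upgrade(storage: &mut Storage) -> u64 {
    // Guard: the migration must not run again after it has been applied.
    if storage.storage_version >= 2 {
        return 0;
    }
    let old = std::mem::take(&mut storage.balances_v1);
    let mut migrated = 0u64;
    for (account, balance) in old {
        storage.balances_v2.insert(account, u64::from(balance));
        migrated += 1;
    }
    storage.storage_version = 2;
    migrated
}
```

The version guard matters because the upgrade hook may be reached again on a later runtime upgrade; without it the migration would run over data that is already in the new layout.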

tight coupling (two groups of classes depend on each other), loose coupling (one class uses only the public interface of another)

Code walkthrough

Substrate 代码导读:node-template - Kaichao 的文章 - 知乎

https://zhuanlan.zhihu.com/p/123167097

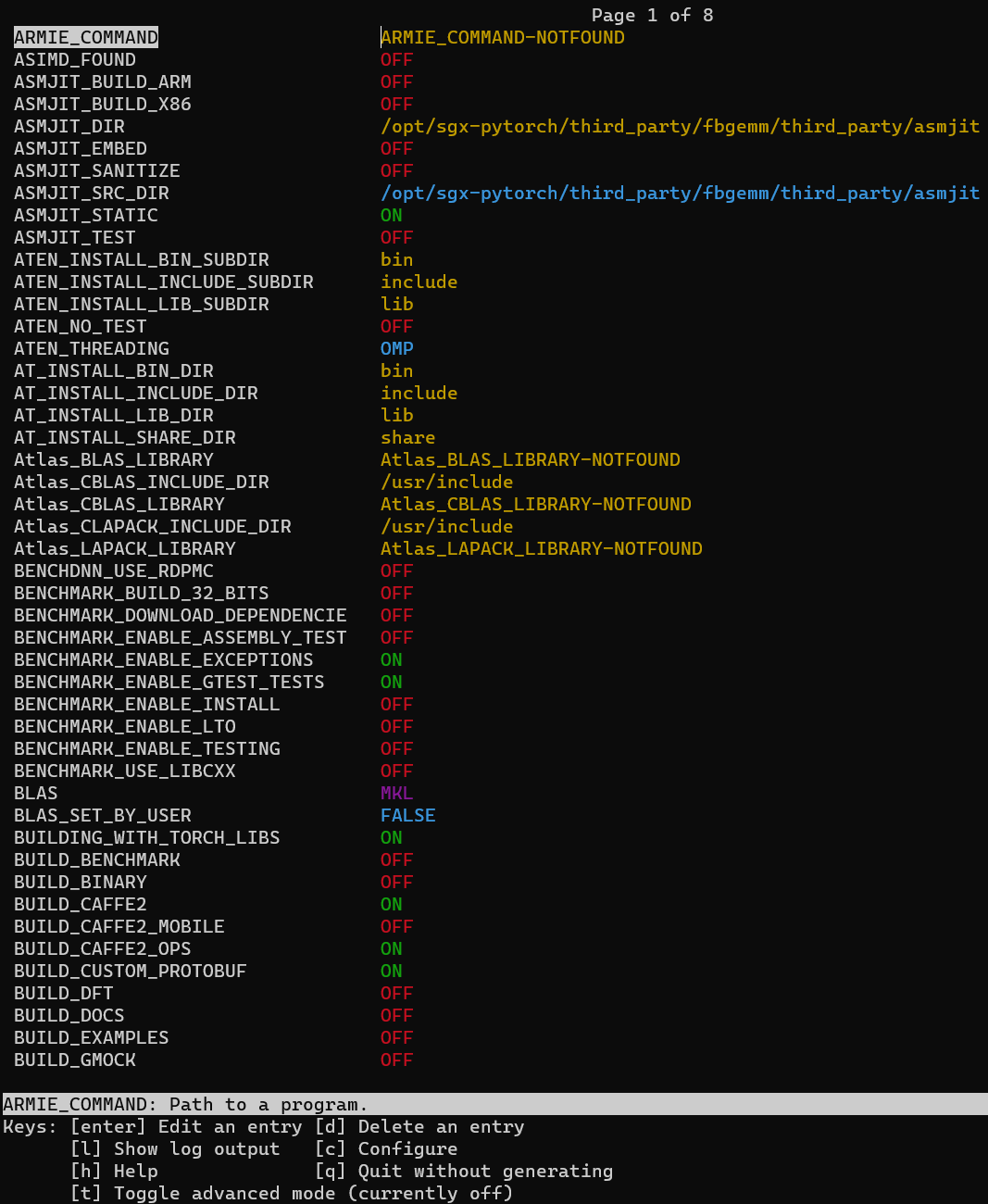

Creating or importing a crate into the Runtime's Wasm:

Substrate 入门 - Runtime 的 wasm 与 native -(九) - 金晓的文章 - 知乎

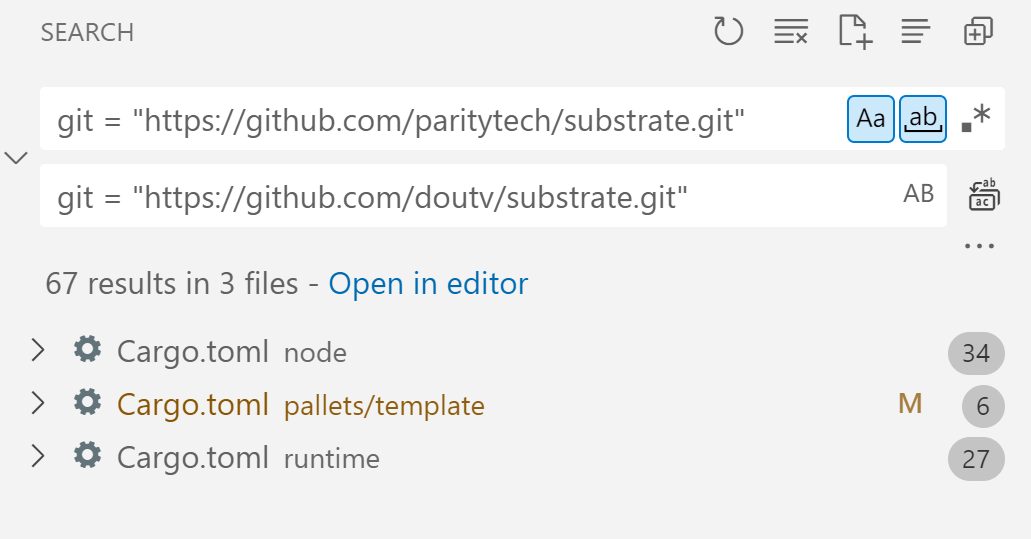

Writing the Cargo.toml of a crate in the Runtime dependency tree

Substrate 入门 - Substrate 的模型设计 -(七) - 金晓的文章 - 知乎

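Although the Substrate Runtime is written in Rust and can be compiled to both native Rust code and Wasm code, it cannot call every interface that Rust's std library provides. Likewise, a newly added library that supports only std and not no_std will fail to compile.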

[dependencies]

# In dependencies, default-features = false is required

frame-system = { version = "2.0.0", default-features = false, path = "../system" }

[features]

default = ["std"] # the feature enabled by default is std

std = [

"serde",

# ...

]

- The first lines of lib.rs in the crate's root directory:

// When not compiled with the std feature, build this crate as `no_std`

#![cfg_attr(not(feature = "std"), no_std)]

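Writing the contents of a crate in the Runtime dependency tree

For such a crate, note the following points:

- The first line of lib.rs must be #![cfg_attr(not(feature = "std"), no_std)]

- Standard-library types such as Vec, Result, and BTreeMap must be imported through the sp-std crate.

- The Runtime's dependencies must not use standard-library types and macros from std that sp-std does not re-export, such as String or the println! macro. If they cannot be avoided, wrap them in conditional compilation with #[cfg(feature = "std")]. The wrapped part is then left out when compiling to Wasm, so you must guarantee that native and Wasm still produce identical results without it. (For example, a lone println! only prints under native and does not affect the result. Logic that changes the state of variables inside the conditionally compiled part must be forbidden, because nodes would otherwise produce different results at run time.)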

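Making a third-party library compatible with the Runtime Wasm build

Be clear about what the library is for, and confirm that its code really has to execute inside the Runtime. If it can only run inside the Runtime, the only option is to adapt it so that it meets the conditions above, and all of its dependencies must meet them too. If it does not have to run inside the Runtime, extract only the definitions and export the implementation to native through runtime_interface.

If the library is only needed under native (serde, for example), import it as an optional dependency so that it is compiled only under std.

So think carefully about whether a third-party library really must become part of the Runtime's build dependencies, because doing so is not simple. On one hand, a new library bloats the Wasm file (many dependencies are compiled in with it); on the other hand, adapting a library to compile for the Runtime Wasm takes a lot of work.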

Macro expansion

Substrate 入门 - 学习 Runtime 必备的技能 -(十一) - 金晓的文章 - 知乎

https://zhuanlan.zhihu.com/p/110185152

cargo expand: https://github.com/dtolnay/cargo-expand

runtime/src/lib.rs

cargo install cargo-expand

cd runtime

cargo expand > expand.rs

# expand.rs contains runtime/src/lib.rs with all macros expanded

// Before expansion

// Define the types required by the Scheduler pallet.

parameter_types! {

pub MaximumSchedulerWeight: Weight = 10_000_000;

pub const MaxScheduledPerBlock: u32 = 50;

}

// After expansion

pub struct MaxScheduledPerBlock;

impl MaxScheduledPerBlock {

/// Returns the value of this parameter type.

pub const fn get() -> u32 {

50

}

}

impl<I: From<u32>> ::frame_support::traits::Get<I> for MaxScheduledPerBlock {

fn get() -> I {

I::from(Self::get())

}

}

construct_runtime!(

pub enum Runtime where

Block = Block,

NodeBlock = opaque::Block,

UncheckedExtrinsic = UncheckedExtrinsic

{

...

}

);

// Expands recursively into a very long piece of code

pallets/template/src/lib.rs

Rust syntax notes:

const _: () = {

impl<T> core::cmp::Eq for Pallet<T> {}

};

// The struct on which we build all of our Pallet logic.

#[pallet::pallet]

#[pallet::generate_store(pub(super) trait Store)]

pub struct Pallet<T>(_);

// Expands to

pub struct Pallet<T>(frame_support::sp_std::marker::PhantomData<(T)>);

// Unnamed constants

const _: () = {

impl<T> core::clone::Clone for Pallet<T> {

fn clone(&self) -> Self {

Self(core::clone::Clone::clone(&self.0))

}

}

};

const _: () = {

impl<T> core::cmp::Eq for Pallet<T> {}

};

const _: () = {

impl<T> core::cmp::PartialEq for Pallet<T> {

fn eq(&self, other: &Self) -> bool {

true && self.0 == other.0

}

}

};

const _: () = {

impl<T> core::fmt::Debug for Pallet<T> {

fn fmt(&self, fmt: &mut core::fmt::Formatter) -> core::fmt::Result {

fmt.debug_tuple("Pallet").field(&self.0).finish()

}

}

};

Runtime versioning

Related to forkless upgrades

// runtime/src/lib.rs

// https://docs.substrate.io/v3/runtime/upgrades/#runtime-versioning

// In order for the [executor](https://docs.substrate.io/v3/advanced/executor/) to be able to select the appropriate runtime execution environment, it needs to know the `spec_name`, `spec_version` and `authoring_version` of both the native and Wasm runtime.

#[sp_version::runtime_version]

pub const VERSION: RuntimeVersion = RuntimeVersion {

spec_name: create_runtime_str!("node-template"),

impl_name: create_runtime_str!("node-template"),

authoring_version: 1,

// The version of the runtime specification. A full node will not attempt to use its native

// runtime in substitute for the on-chain Wasm runtime unless all of `spec_name`,

// `spec_version`, and `authoring_version` are the same between Wasm and native.

// This value is set to 100 to notify Polkadot-JS App (https://polkadot.js.org/apps) to use

// the compatible custom types.

spec_version: 102,

impl_version: 1,

apis: RUNTIME_API_VERSIONS,

transaction_version: 1,

state_version: 1,

};